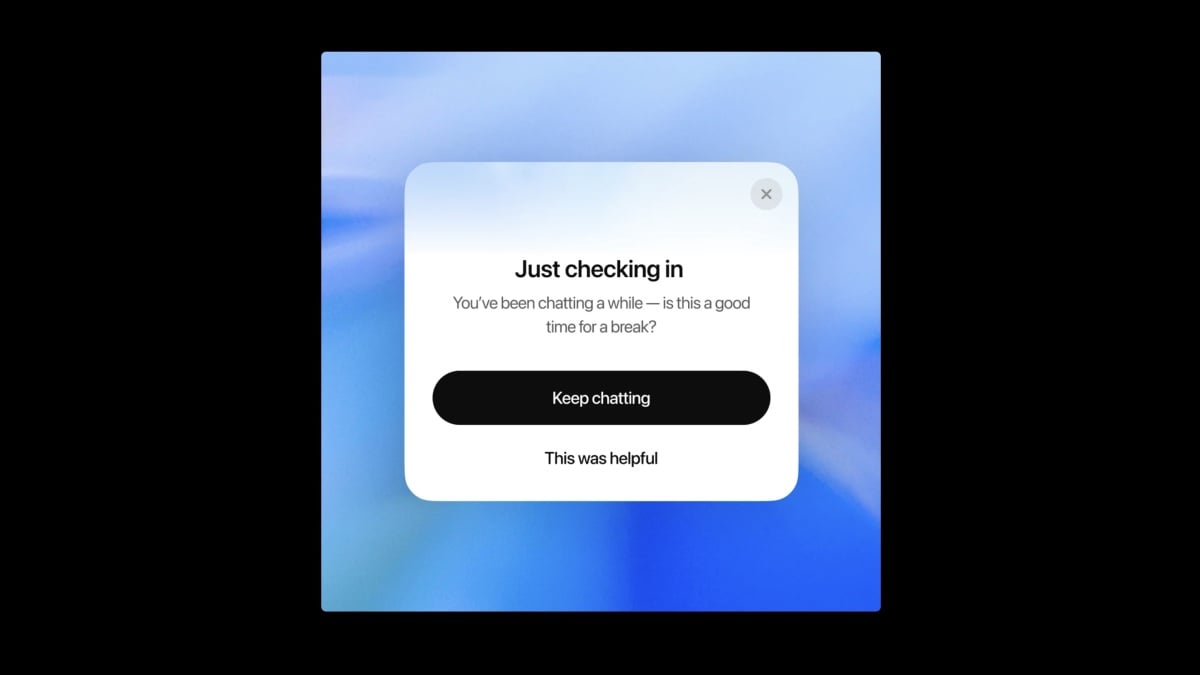

If your daily routine involves chatting with ChatGPT often, you might be surprised to see a new pop-up this week. After a lengthy conversation, you may be presented with a "Just checking in" window, with a message noting "You've been chatting a while" and asking "is this a good time for a break?"

The pop-up gives you the option to "Keep chatting," or even to select "This was helpful." Depending on your outlook, you might see this as a good reminder to put the app down for a while, or as a condescending note implying you don't know how to limit your own time with a chatbot.

Don't take it personally. OpenAI might seem to care about your usage habits with this pop-up, but the real reason behind the change is a bit darker than that.

Hooked on ChatGPT

This new usage reminder was part of a larger announcement from OpenAI on Monday, titled "What we're optimizing ChatGPT for." In the post, the company says that it values how you use ChatGPT, and that while it wants you to use the service, it also sees a benefit in using the service less. Part of that comes through features like ChatGPT Agent, which can take actions on your behalf, but also through making the time you do spend with ChatGPT more effective and efficient.

That's all well and good: If OpenAI wants to make ChatGPT conversations just as useful in a fraction of the time, so be it. But this isn't simply coming from a desire to have users speedrun their interactions with ChatGPT; rather, it's a direct response to how addictive ChatGPT can be, especially for people who rely on the chatbot for mental or emotional support.

You don't need to read between the lines on this one, either. To OpenAI's credit, the company directly addresses serious issues some of its users have experienced with the chatbot lately, including an update earlier this year that made ChatGPT far too agreeable. Chatbots tend to be enthusiastic and friendly, but the update to the GPT-4o model took it too far. ChatGPT would affirm that all of your ideas, whether good, bad, or terrible, were valid. In the worst cases, the bot ignored signs of delusion and directly fed into those users' warped perspectives.

OpenAI openly acknowledges this happened, though the company believes these instances were "rare." Still, it's tackling the problem directly: In addition to these reminders to take a break from ChatGPT, the company says it's improving its models to watch for signs of distress, as well as to avoid directly answering complex or difficult personal questions, like "Should I break up with my partner?" OpenAI says it's even collaborating with experts, clinicians, and medical professionals in a variety of ways to get this done.

We should all use AI a bit less

It's definitely a good thing that OpenAI wants you using ChatGPT less, and that it's actively acknowledging the chatbot's issues and working to address them. But I don't think it's enough to rely on OpenAI here. What's in the company's best interest isn't always going to be what's in yours. And, in my opinion, we could all benefit from stepping back from generative AI.


As more and more people turn to chatbots for help with work, relationships, or their mental health, it's important to remember these tools aren't perfect, or even completely understood. As we saw with GPT-4o, AI models can be flawed and can start encouraging dangerous ways of thinking. AI models can also hallucinate, or, in other words, make things up entirely. You might assume the information your chatbot gives you is 100% accurate, but it may be riddled with errors or outright falsehoods. How often are you fact-checking your conversations?

Trusting AI with your private and personal thoughts also poses a privacy risk, as companies like OpenAI store your chats, and those chats come with none of the legal protections that conversations with a licensed medical professional or legal representative do. On top of that, emerging studies suggest that the more we rely on AI, the less we rely on our own critical thinking skills. While it may be hyperbolic to say that AI is making us "dumber," I'm concerned by the amount of mental effort we're outsourcing to these new bots.

Chatbots aren't licensed therapists; they tend to make things up; they offer few privacy protections; and they may even encourage delusional thinking. It's great that OpenAI wants you using ChatGPT less, but we might want to use these tools even less than that.

Disclosure: Lifehacker's parent company, Ziff Davis, filed a lawsuit against OpenAI in April, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.